Define and load dataset

Deep learning models need a large amount of data for training and evaluation. The data samples may be images, text, audio, or other types, but model training itself is a mathematical computation. The samples therefore go through a series of processing steps before they are fed into the model, such as converting data formats, dividing the dataset, transforming data shapes, and building data iterators for batch training.
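The processing steps listed above can be sketched with plain NumPy. This is only an illustration under stated assumptions: the random arrays stand in for real data, and the helper name iterate_batches, the 80/20 split, and the batch size of 32 are hypothetical choices, not part of TensorLayerX.

```python
import numpy as np

# Hypothetical raw data: 100 flattened 28x28 grayscale images stored as uint8.
rng = np.random.default_rng(0)
raw_images = rng.integers(0, 256, size=(100, 784), dtype=np.uint8)
raw_labels = rng.integers(0, 10, size=100)

# 1. Convert the data format: uint8 -> float32.
images = raw_images.astype(np.float32)

# 2. Transform the shape: flat vectors -> (N, height, width, channels).
images = images.reshape(-1, 28, 28, 1)

# 3. Divide the dataset: first 80 samples for training, the rest for validation.
train_images, val_images = images[:80], images[80:]
train_labels, val_labels = raw_labels[:80], raw_labels[80:]

# 4. Build a batch iterator for training (hypothetical helper).
def iterate_batches(data, labels, batch_size):
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size], labels[start:start + batch_size]

batch_shapes = [batch.shape for batch, _ in iterate_batches(train_images, train_labels, 32)]
print(batch_shapes)
```

The last batch is smaller than the others because 80 is not a multiple of 32.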

In the TensorLayerX framework, a dataset is defined and loaded through the following two core steps:

Define dataset: Map the original images, text, and other samples saved on disk, together with their labels, to a Dataset so that they can later be read by index. Data transformations, data augmentation, and other preprocessing operations can also be performed in the Dataset. In the TensorLayerX framework, it is recommended to use tensorlayerx.dataflow.Dataset to define the dataset. In addition, some classic datasets are built into the tensorlayerx.files.dataset_loaders directory and can be called directly.

Iterate to load dataset: Automatically batch and shuffle the samples of the dataset, among other operations, to facilitate iterative reading during training. Multi-process asynchronous reading is also supported to speed up data loading. In the TensorLayerX framework, tensorlayerx.dataflow.DataLoader can be used to load the dataset iteratively.

This article uses an image dataset as the example.

1. Define dataset

1.1 Load built-in datasets directly

The TensorLayerX framework provides some classic built-in datasets in the tensorlayerx.files.dataset_loaders directory, which can be called directly. The following code lists the built-in datasets.

import tensorlayerx

print('Datasets related to computer vision (CV) and natural language processing (NLP):', tensorlayerx.files.dataset_loaders.__all__)

Datasets related to computer vision (CV) and natural language processing (NLP): ['load_celebA_dataset',

'load_cifar10_dataset',

'load_cyclegan_dataset',

'load_fashion_mnist_dataset',

'load_flickr1M_dataset',

'load_flickr25k_dataset',

'load_imdb_dataset',

'load_matt_mahoney_text8_dataset',

'load_mnist_dataset',

'load_mpii_pose_dataset',

'load_nietzsche_dataset']

As the printed result shows, TensorLayerX has built-in datasets for the CV domain, such as MNIST, FashionMNIST, Cifar10, flickr1M, and flickr25k, and for the NLP domain, such as Imdb and nietzsche.

For example, the following code loads the built-in MNIST dataset.

import tensorlayerx as tlx

X_train, y_train, X_val, y_val, X_test, y_test = tlx.files.load_mnist_dataset(shape=(-1, 28, 28, 1))

X_train = X_train * 255

The built-in MNIST dataset is already divided into training, validation, and test sets.

To define a custom dataset, build a subclass that inherits from tensorlayerx.dataflow.Dataset and implements the following three functions:

__init__: Initialize the dataset, for example by mapping the sample file paths on disk and their corresponding labels to a list.

__getitem__: Define how a sample is fetched for a given index, and return the single item at that index as (sample data, corresponding label).

__len__: Return the total number of samples in the dataset.

from tensorlayerx.dataflow import Dataset
from tensorlayerx.vision.transforms import Normalize, Compose

class MNISTDataset(Dataset):
    """
    Step 1: inherit the tensorlayerx.dataflow.Dataset class
    """

    def __init__(self, data, label, transform=None):
        """
        Step 2: implement the __init__ function to initialize the dataset and store the samples, labels, and transform
        """
        self.data_list = data
        self.label = label
        self.transform = transform

    def __getitem__(self, index):
        """
        Step 3: implement the __getitem__ function to define how a sample is fetched for the specified index, and return the single item (sample data, corresponding label)
        """
        image = self.data_list[index].astype('float32')
        # Apply the preprocessing pipeline only if one was provided
        if self.transform is not None:
            image = self.transform(image)
        label = self.label[index].astype('int64')
        return image, label

    def __len__(self):
        """
        Step 4: implement the __len__ function to return the total number of samples in the dataset
        """
        return len(self.data_list)

# Normalize each pixel as (x - 127.5) / 127.5
transform = Compose([Normalize(mean=[127.5], std=[127.5], data_format='HWC')])
train_dataset = MNISTDataset(data=X_train, label=y_train, transform=transform)

The code above defines a dataset class MNISTDataset, which inherits from the tensorlayerx.dataflow.Dataset base class and implements the three functions __init__, __getitem__, and __len__.

In the __init__ function, the image array and label array passed in are stored as data_list and label, together with the transform to apply.

In the __getitem__ function, the sample at the specified index is fetched and converted to float32, the transform is applied, the label is converted to int64, and finally the pair image, label is returned.

In the __len__ function, the length of data_list, the sample list stored in the __init__ function, is returned.

Data preprocessing operations such as flipping, cropping, and normalization can also be implemented in the __init__ and __getitem__ functions, so that the processed single item (sample data, corresponding label) is returned. Augmentations such as flipping and cropping increase the diversity of image data and help improve the generalization ability of the model. The TensorLayerX framework provides dozens of built-in image processing methods in the tensorlayerx.vision.transforms module. For detailed usage, refer to the Data Processing section.
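To make the arithmetic behind these operations concrete, here is a minimal pure-Python sketch of normalization and a random horizontal flip on a tiny 2x2 image. The functions normalize and random_horizontal_flip are hypothetical helpers written for illustration. They are not the tensorlayerx.vision.transforms implementations, which should be used in practice.

```python
import random

def normalize(image, mean=127.5, std=127.5):
    """Map pixel values from [0, 255] to [-1, 1] via (x - mean) / std."""
    return [[(pixel - mean) / std for pixel in row] for row in image]

def random_horizontal_flip(image, prob=0.5, rng=random):
    """Reverse each row with probability `prob` (hypothetical helper)."""
    if rng.random() < prob:
        return [row[::-1] for row in image]
    return image

# A tiny 2x2 grayscale "image" stored as a list of rows.
image = [[0, 255], [51, 204]]

normalized = normalize(image)
# prob=1.0 forces the flip so the effect is visible.
flipped = random_horizontal_flip(image, prob=1.0)

print(normalized)
print(flipped)
```

With mean and std both set to 127.5, pixel value 0 maps to -1 and 255 maps to 1, which matches the Normalize settings used for MNISTDataset above.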

The custom dataset can be iterated directly, as the following code shows.

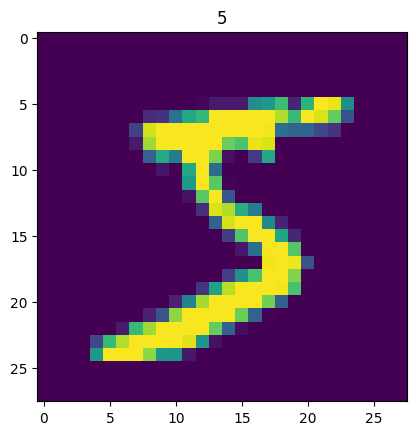

from matplotlib import pyplot as plt

for data in train_dataset:
    image, label = data
    print('shape of image:', image.shape)
    plt.title(str(label))
    plt.imshow(image[:, :, 0])
    break

shape of image: (28, 28, 1)
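A for loop can work on a class that only defines __getitem__ because Python falls back to its sequence iteration protocol: it calls __getitem__ with 0, 1, 2, and so on until an IndexError is raised. The sketch below shows this with a hypothetical TinyDataset class. It assumes that the dataset base class does not define its own __iter__, which is not verified here for tensorlayerx.dataflow.Dataset.

```python
class TinyDataset:
    """Hypothetical stand-in for a map-style dataset; it defines no __iter__."""

    def __init__(self, samples, labels):
        self.samples = samples
        self.labels = labels

    def __getitem__(self, index):
        # Indexing past the end of a list raises IndexError, which ends the loop.
        return self.samples[index], self.labels[index]

    def __len__(self):
        return len(self.samples)

dataset = TinyDataset(["a", "b", "c"], [0, 1, 2])

# Python calls dataset[0], dataset[1], ... until IndexError is raised.
visited = [pair for pair in dataset]
print(visited)
```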

2. Read the dataset iteratively

2.1 Use tensorlayerx.dataflow.DataLoader to define the data reader

Although the direct iteration shown above can access the dataset, it runs in a single thread and requires batches to be divided manually. In the TensorLayerX framework, it is recommended to use the tensorlayerx.dataflow.DataLoader API to read the dataset with multiple processes and divide it into batches automatically.

# Define and initialize the data reader
train_loader = tlx.dataflow.DataLoader(train_dataset, batch_size=64, shuffle=True)

# Read the data iteratively using DataLoader
for batch_id, data in enumerate(train_loader):
    images, labels = data
    print("batch_id: {}, training data shape: {}, label data shape: {}".format(batch_id, images.shape, labels.shape))
    break

batch_id: 0, training data shape: [64, 28, 28, 1], label data shape: [64]

The code above initializes a data reader train_loader that loads the training dataset train_dataset. Several common fields of the data reader are as follows:

batch_size: The number of samples read in each batch. In the above example, batch_size=64 means that 64 samples are read in each batch.

shuffle: Whether to shuffle the samples. In the above example, shuffle=True means that the sample order is shuffled when the data is read, to reduce the possibility of overfitting.

After the data reader is defined, a for loop can iterate over it to read batches for model training. Note that if the high-level API tlx.model.Model.train is used for training, only the Dataset needs to be defined and a separate DataLoader is not required, because tlx.model.Model.train already encapsulates part of the DataLoader functionality. For details, refer to the Model Training, Evaluation and Inference section.

Note: DataLoader uses the batch sampler BatchSampler to generate lists of batch indexes, then fetches the corresponding samples from the Dataset by index to assemble each batch. The batch_size and shuffle fields of DataLoader determine the batch size and the sampling order. These two fields can be replaced by a single batch_sampler field that takes a custom batch_sampler instance. Either approach achieves the same effect, and a custom sampler allows the sampling rules to be defined more flexibly.
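The index-based mechanism described in the note can be sketched in pure Python. This illustrates the idea only and is not the TensorLayerX implementation: make_batch_indices and load_batches are hypothetical names, and the seed parameter is an assumption added so that the shuffle is reproducible.

```python
import random

def make_batch_indices(num_samples, batch_size, shuffle=False, seed=None):
    """Return a list of index lists, one per batch (hypothetical helper)."""
    indices = list(range(num_samples))
    if shuffle:
        # Shuffle the sample order before cutting it into batches.
        random.Random(seed).shuffle(indices)
    return [indices[i:i + batch_size] for i in range(0, num_samples, batch_size)]

def load_batches(dataset, batch_indices):
    """Fetch the samples of each batch from the dataset by index."""
    for batch in batch_indices:
        yield [dataset[i] for i in batch]

# Hypothetical dataset of ten (sample, label) pairs.
dataset = [("image_{}".format(i), i % 10) for i in range(10)]

batches = make_batch_indices(len(dataset), batch_size=4)
loaded = list(load_batches(dataset, batches))
print(batches)

shuffled = make_batch_indices(len(dataset), batch_size=4, shuffle=True, seed=0)
print(shuffled)
```

Without shuffling, the batches cover the indexes in order and the last batch holds the two remaining samples. With shuffling, every index still appears exactly once, only the order changes.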

3. Summary

This section introduces how data is processed before it is fed into model training in the TensorLayerX framework.

The process consists of two main steps: defining the dataset and defining the data reader. In the data reader, a sampler can be used to implement more flexible sampling. When defining the dataset, this section covers only normalization. For more data augmentation operations, refer to the Data Processing section.

Once data processing is complete, the next task is model training, evaluation, and inference.